Is AI “asbestos in the walls of our tech society”?

Whether I open LinkedIn, turn on the TV, read the news, listen to a podcast, or catch up with a friend, I’m almost guaranteed to encounter some form of AI marketing, hype, expectation, or speculation.

As a self‑declared advocate for AI adoption, I want to stay grounded in the very real, intelligent, and objective criticisms of this still‑fledgling technology. This grounding is what I believe will differentiate Placid Works from its competition and help fulfill the promise of taking an incremental, measured, and pragmatic approach to AI.

Two recent media items, in particular, sharpened my thinking.

Is AI the Next Tech Bubble?

The first was an alarming and incisive piece by blogger, journalist, and science‑fiction author Cory Doctorow in The Guardian, in which he wrote:

“AI is asbestos in the walls of our tech society.”

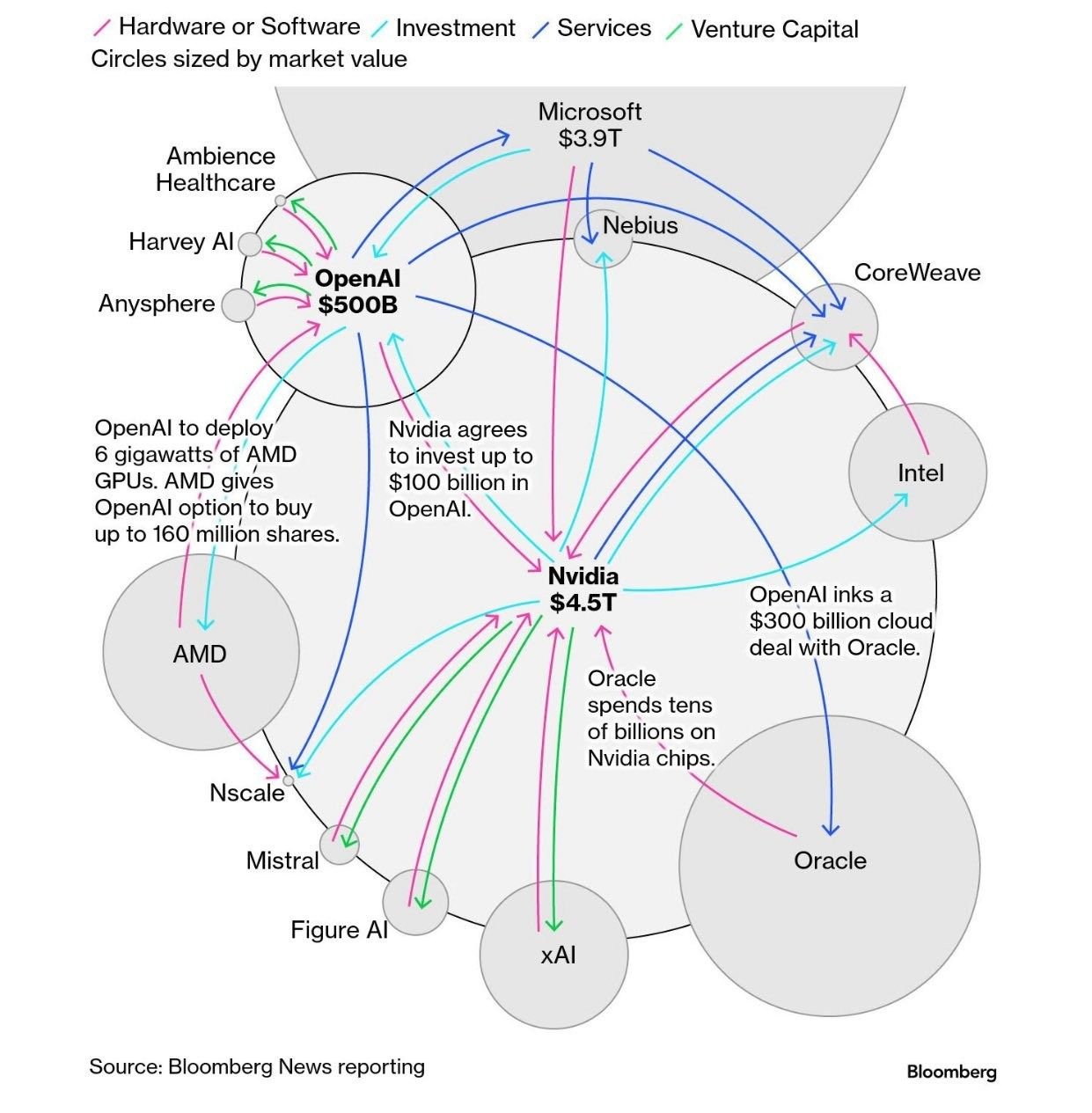

Doctorow argues that the AI boom is an unsustainable financial bubble—one driven less by genuine technological capability and more by monopolistic tech giants desperate to maintain “growth stock” valuations. These companies, already dominant in markets like search, mobile, and advertising, are using AI as the next hype cycle to convince investors that exponential growth is still possible, even as their core markets reach saturation.

On this point, I’m inclined to agree—particularly regarding the unsustainable nature of stock growth fueled by circular investment announcements.

Lessons From the Dot‑Com Era

I started my career in 2000, joining a dot‑com company in London straight out of college. It was an incredible time to enter IT. Major breakthroughs arrived rapidly, and optimism seemed boundless. Of course, we all know how that era ended, and my 21‑year‑old self soon found out too.

The hype and speculation were enormous, and near‑term reality didn’t live up to the promise. Twenty‑five years later, I can see that many of those ideas were directionally correct—but simply too early or not mature enough at the time.

My personal view is that we’re in a similar near‑term AI bubble today, and that it will correct significantly. Unfortunately, I do think this will affect the “Magnificent Seven,” and by extension the S&P 500—and, therefore, retirement savings. The good news is that I don’t see any of these companies going out of business anytime soon. Long‑term investors will likely be just fine a decade or two from now.

Despite my short‑term concerns, I still believe many aspects of today’s AI boom are directionally correct—much like foundational internet and e‑commerce trends proved to be in the long run. I expect a similar outcome with AI. The quote often attributed to Mark Twain feels especially apt here:

“History does not repeat itself, but it rhymes.”

The “Reverse Centaur” Concern

Doctorow also introduces the idea of the “reverse centaur”—a workplace model where humans become appendages to flawed AI systems rather than being empowered by them. His examples include Amazon delivery drivers heavily monitored by AI cameras, or medical and technical professionals reduced to supervising AI tools while bearing the blame when those tools fail. In this model, AI doesn’t replace labor outright; instead, it degrades job quality while enabling cost‑cutting and accountability avoidance.

Frankly, I’m less concerned about this dystopia becoming widespread—at least in my corner of the business world.

Doctorow is right to raise the concern. There’s a clear parallel to social media and algorithmic feeds, where humans increasingly stop thinking about what’s next because “the algorithm” does it for them. Still, I see several reasons why the reverse centaur is unlikely to dominate:

The stated goal of AI adoption is human leverage, not human degradation.

In many cases, AI is meant to handle laborious or repetitive tasks so humans can focus on higher‑order work. An Amazon truck may eventually not need a human driver, but humans ensuring fleets of autonomous vehicles operate safely is a centaur model, not a reverse one.

Human nature resists prolonged control.

I believe deeply in human ingenuity and our instinctive response to being constrained. People may tolerate tight control for short periods if the trade‑off seems fair, but history shows this isn’t sustainable long‑term.

The technology simply isn’t that good, yet.

Despite the marketing and hype, AI isn’t delivering on its loftiest promises today, and it’s unlikely to do so in the near future.

That last point leads to the second media item that caught my attention.

Why Bigger Models Aren’t the Answer

Steve Eisman’s interview with Gary Marcus on The Real Eisman Playbook was a refreshing dose of realism. (I highly recommend the show if you’re interested in markets.)

Marcus—an AI researcher, cognitive scientist, and outspoken critic of modern deep learning—explains why Large Language Models (LLMs) are unlikely to deliver Artificial General Intelligence (AGI). He also argues that scaling models indefinitely leads to diminishing returns, even as costs (GPUs, energy, and capital) continue to rise.

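This reinforces the idea of a market bubble driven by venture capital incentives and by investments in ideas that sound compelling but may not hold up over the long term. The industry’s fixation on the 2% management fee matters here: because that fee is typically charged on the size of a fund rather than on its returns, raising ever‑larger funds pays off regardless of how the bets turn out.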

Useful, But Not Magical

While I’ve had many positive experiences with AI, I can’t ignore the limitations Marcus describes. Modern AI chat tools are objectively useful—especially at pattern matching and language tasks—but they remain a long way from AGI.

I also agree with his implicit point that we’re entering what Placid Works calls a “race to the middle.” Once models reach sufficient scale, there will be little to separate them, and simply adopting an AI chat tool won’t meaningfully differentiate a business.

To be clear: I still think you should adopt tools like Microsoft 365 Copilot. Early adopters will gain real advantages over those who delay. But that advantage won’t last forever.

Incremental Value Over Grand Promises

Even if Gary Marcus is entirely right about LLMs not leading us to AGI, we shouldn’t dismiss the real and meaningful utility they provide today. People are excited about AI adoption because many use cases genuinely improve productivity and decision‑making.

My focus—and the focus of Placid Works—is on identifying those use cases, promoting them responsibly, and setting realistic expectations about what today’s tools can and cannot do. This is a long journey, and there will be many more AI phases ahead.

Final Thoughts

AI is here to stay, and I’m betting my business on it. But progress doesn’t require blind optimism. By engaging seriously with thoughtful critiques and remaining disciplined in our expectations, we can make the next incremental, measured, and pragmatic decisions that lead to real innovation.

AI is not “asbestos.” But it does require careful handling.